Dynamic Face Perception: The Role of Expertise in Dual Processing of Features and Configuration

Yinqi Huang

Published: February 23, 2023 • DOI: https://doi.org/10.33137/juls.v16i1.40382

Abstract

Face perception is the basis of many types of social information exchange, but there is controversy over its underlying mechanisms. Researchers have theorized two processing pathways underlying face perception: configural processing and featural processing. Featural processing focuses on the individual features of a face, whereas configural processing focuses on the spatial relations of features. To resolve the debate on the relative contribution of the two pathways in face perception, researchers have proposed a dual processing model in which the two pathways contribute to two different levels of perception: detecting face-like patterns and identifying individual faces. The dual processing model is based on face perception experiments that primarily use static faces. As we mostly interact with dynamic faces in real life, the generalization of the model to dynamic faces will advance our understanding of how faces are perceived in real life. This paper proposes a refined dual processing model of dynamic face perception, in which expertise in dynamic face perception supports identifying individual faces and is a learned behaviour that develops with age. Specifically, facial motions account for the advantages of dynamic faces compared to static faces. This paper highlights two intrinsic characteristics of facial motions that enable the advantages of dynamic faces in face perception. Firstly, facial motion provides facial information from various viewpoints, and thus supports the generalization of face perception to unlearned views of faces. Secondly, distinctive motion patterns serve as a cue to the identity of the face.

Introduction

Face perception is the basis of many types of social information exchange because faces provide visual information vital for social interaction, such as group membership, emotional states, and attention1,2. Both behavioural and neurobiological evidence suggest that there is a complex mechanism underlying face perception, but there is a debate on the cognitive processes that comprise it. Researchers have theorized two processing pathways: featural processing and configural processing3-6. Featural processing focuses on the individual features of a face, whereas configural processing focuses on the spatial relations of the features. These two pathways have different development rates7-9 and are associated with different ERP markers10,11 and different brain regions12-14. Because the two pathways have distinguishable behavioural outcomes and neuroanatomical localization, the two pathways are considered separable yet intertwined.

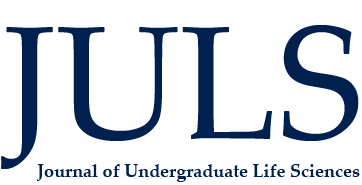

Figure 1. Configural and featural processing in the spectrum view and the dual process view. Configural processing encodes both facial features and spatial distance, whereas featural processing encodes individual facial characteristics, such as the shapes of the eyes, nose, and mouth. The spectrum view suggests either strategy is sufficient for face perception, while the dual processing model argues both are necessary for different levels of perception. The fast route, supported by configural processing, enables rapid face detection, while the slow route, supported by featural processing, enables goal-driven face identification. Graphics created with BioRender.com.

Though researchers agree that both pathways contribute to face perception, they have been debating the relative contributions of the two. Some support a configuration-dominant view that face perception is dominated by the perception of the configuration of features4,15, while others argue for a feature-dominant view that isolated features can support face perception16 and are processed independently from the configuration17. Some researchers also take a moderate view that both pathways contribute to face perception, with configural processing playing the main role5,6. To settle this debate, de Gelder theorized a dual processing model, which states that the two pathways contribute to different levels of face perception18. However, de Gelder’s model is based on face perception experiments that use primarily static faces. As we mostly interact with dynamic faces in real life, generalization of the model to dynamic faces will advance our understanding of how faces are perceived in real life. Researchers have observed that dynamic faces are more identifiable than static faces, and they have proposed factors that explain this advantage19-21. Built on the dual processing model, this paper proposes a refined model in which expertise with dynamic faces supports face perception. This paper first reviews configural processing and featural processing; it then discusses behavioural and neurobiological evidence supporting the dual processing model; finally, it reviews behavioural studies that support the refined dual processing model. The refined model proposes that dynamic face perception supports face identification and that it is a learned behaviour that develops with age.

Featural Processing versus Configural Processing

Featural processing in face perception refers to the theorized processing strategy in which the characteristics of individual facial features, such as the shapes of the eyes, nose, and mouth, are encoded, whereas configural processing refers to the strategy in which the features are encoded along with the spatial distances among them. Researchers have distinguished three types of configural processing: first-order processing, second-order processing, and holistic processing22. First-order processing refers to the relative position of features that generates a face-like stimulus (e.g., two eyes above a mouth), whereas second-order processing refers to the spatial distances among features, which contribute to the individualization of faces. Holistic processing refers to the process that integrates features into a gestalt. For brevity, this paper refers to all three types as configural processing, because the discussion focuses on the higher-level distinction between configural processing and featural processing.

Though some might argue that it is impossible to separate featural processing from configural processing because configural processing always involves some degree of featural representation, there are techniques to prioritize the employment of one of the processing pathways. For example, Lobmaier and his colleagues designed a blurred face stimulus in which the featural configuration was preserved, but the blurred features were unidentifiable23. The assumption was that the study participants would prioritize the processing of configural information because featural information was not informative. Similarly, they designed a scrambled face stimulus in which the facial features were intact, but the configuration of features was scrambled23, so that participants would prioritize featural processing. By testing participants with congenital prosopagnosia, a clinical face-blind condition, the researchers were able to partition the two pathways behaviourally. Congenital prosopagnosia refers to the impairment of face perception that is evident from early childhood in the absence of manifest brain injury. Lobmaier found that prosopagnosic patients performed worse in identifying familiar faces when they were blurred than when they were scrambled23, suggesting that the deficit arose when the patients had to employ configural processing because featural processing was discouraged by the blurred facial features. Another technique is to cut a face stimulus horizontally and misalign the top and bottom halves of the face, so that the facial features are preserved but the featural configuration is distorted. In contrast to controls, prosopagnosic patients paradoxically performed similarly on identifying aligned and misaligned faces24, suggesting that with intact features, they employed featural processing, which was not hindered by the misalignment. Together, the studies demonstrate that prosopagnosic patients process featural and configural information independently, and that researchers can design an experiment in such a way that participants selectively employ one of the two processing pathways.
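The logic of these three stimulus manipulations can be illustrated with a minimal Python sketch. It is not the procedure used in the cited studies: the toy 4×4 pixel grid, the function names (`box_blur`, `scramble_blocks`, `misalign_halves`), and all parameter values are assumptions introduced here purely for illustration. Blurring degrades local detail while keeping the coarse layout, scrambling keeps local patches intact while destroying their arrangement, and misaligning shifts the bottom half of the image relative to the top half.

```python
import random

# Hypothetical toy "face": two bright "eyes" above a bright "mouth".
face = [
    [0, 0, 0, 0],
    [9, 0, 0, 9],
    [0, 0, 0, 0],
    [0, 9, 9, 0],
]

def box_blur(image, radius=1):
    """Average each pixel with its neighbours: detail is degraded, coarse layout kept."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            vals = [image[j][i] for j in ys for i in xs]
            out[y][x] = sum(vals) / len(vals)
    return out

def scramble_blocks(image, block=2, seed=0):
    """Shuffle square blocks: block contents stay intact, their arrangement does not."""
    h, w = len(image), len(image[0])
    if h % block or w % block:
        raise ValueError("image size must be a multiple of the block size")
    origins = [(y, x) for y in range(0, h, block) for x in range(0, w, block)]
    shuffled = origins[:]
    random.Random(seed).shuffle(shuffled)
    out = [[0] * w for _ in range(h)]
    for (dy, dx), (sy, sx) in zip(origins, shuffled):
        for j in range(block):
            for i in range(block):
                out[dy + j][dx + i] = image[sy + j][sx + i]
    return out

def misalign_halves(image, shift=1):
    """Shift the bottom half sideways (with wrap-around) relative to the top half."""
    h, w = len(image), len(image[0])
    s = shift % w
    top = [row[:] for row in image[: h // 2]]
    bottom = [row[-s:] + row[:-s] if s else row[:] for row in image[h // 2:]]
    return top + bottom
```

In this sketch, `scramble_blocks(face)` contains exactly the same pixel values as `face` in a different arrangement, whereas `box_blur(face)` keeps the rough position of the bright regions but no longer contains any full-intensity pixel.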

Not only can the two processing pathways be partitioned behaviourally, but neurobiological evidence also supports the separation of the two pathways. Researchers have identified different Event-Related Potentials (ERPs) associated with configural processing and featural processing, respectively10,11. Mercure reported that P1 ERP components were more sensitive to changes in the configuration of face stimuli, whereas P2 was more sensitive to featural changes10. P300 was also more sensitive to featural changes, an effect mediated through attention10. This evidence shows that featural and configural changes are processed at different times and are associated with different neural coding. Additionally, using functional magnetic resonance imaging (fMRI), researchers have identified brain regions that correlate with configural processing and featural processing, respectively12,13. The left fusiform gyrus, the left parietal lobe, and the left lingual gyrus were activated when participants were processing configural information, whereas the middle temporal gyrus was activated when they were processing featural information13. ERP and fMRI data can reveal only correlational relationships, whereas brain stimulation techniques such as Transcranial Magnetic Stimulation (TMS) provide stronger evidence because they establish a causal link: a series of short magnetic pulses stimulates neurons in a targeted brain region, and the resulting behavioural changes can be attributed to that region. A study using TMS demonstrated a double dissociation of brain regions for configural processing and featural processing14. The researchers applied TMS during a face identification task, and they found that applying TMS to the left middle frontal gyrus interfered only with featural processing, whereas applying TMS to the right inferior frontal gyrus interfered with configural processing14. Together with the observation that the two processing pathways mature at different rates in children7-9,22, it is undeniable that the two processing pathways have separate neural underpinnings and behavioural outcomes.

Figure 2. Temporal and spatial precision of EEG, fMRI, and TMS in studying face perception. EEG has exceptional temporal precision, allowing for the detection of fast changes in whole brain activity related to face processing. However, EEG has relatively poor spatial precision and cannot be used to pinpoint the exact brain regions. In contrast, fMRI has excellent spatial precision, which can be useful to identify the precise location related to face perception. However, fMRI has relatively poor temporal precision, making it less suitable for studying fast neural changes. TMS, on the other hand, has excellent temporal precision and can study fast neural changes and establish a causal link between stimulated regions and behavioural changes. However, TMS has relatively poor spatial precision because it may affect neighboring regions around the targeted brain region. The method chosen is dependent on the research question and specific neural process being studied in face perception. Graphics created with BioRender.com.

The Dual Process Model of Face Perception

Though researchers agree that both processing pathways contribute to face perception, they have been debating the relative contributions of the two. Their views fall on a spectrum. On one end, some researchers support the configuration-dominant view that face perception is dominated by the perception of the configuration of features4,15; on the other, researchers argue for the feature-dominant view that isolated features can support face perception16 and are processed independently from the configuration17. Some researchers also take a moderate position that both pathways contribute to the same level of face perception, with configural processing playing the main role5,6. As an alternative, de Gelder disregarded the spectrum framing entirely and proposed a dual processing model in which both processing pathways are necessary but contribute to different levels of face perception18.

The dual processing model states that face perception comprises two systems: face detection and face identification18. Face detection refers to the ability to detect face-like patterns, whereas face identification refers to the ability to identify individual faces. The separation of face detection and face identification explains the paradoxical observation that prosopagnosic patients who had normal face detection could not identify familiar faces18, as the model proposes that the two systems are functionally dissociable. The separation of the two systems is also supported by ERP evidence that facial structures were encoded before face identification25. Furthermore, the model proposes that face detection is supported by a fast route and face identification is supported by a slow route18. The characteristics of configural processing and featural processing also support the fast and slow route proposals. Face detection is a rapid process sensitive to external stimuli such as colour and shape, and it only encodes coarse-grained representations of the stimuli, such as the configuration of face-like patterns. In contrast, the slow route contributes to face identification. It is a voluntary, goal-driven process and is supported by fine-grained representations, such as the featural details of a face.

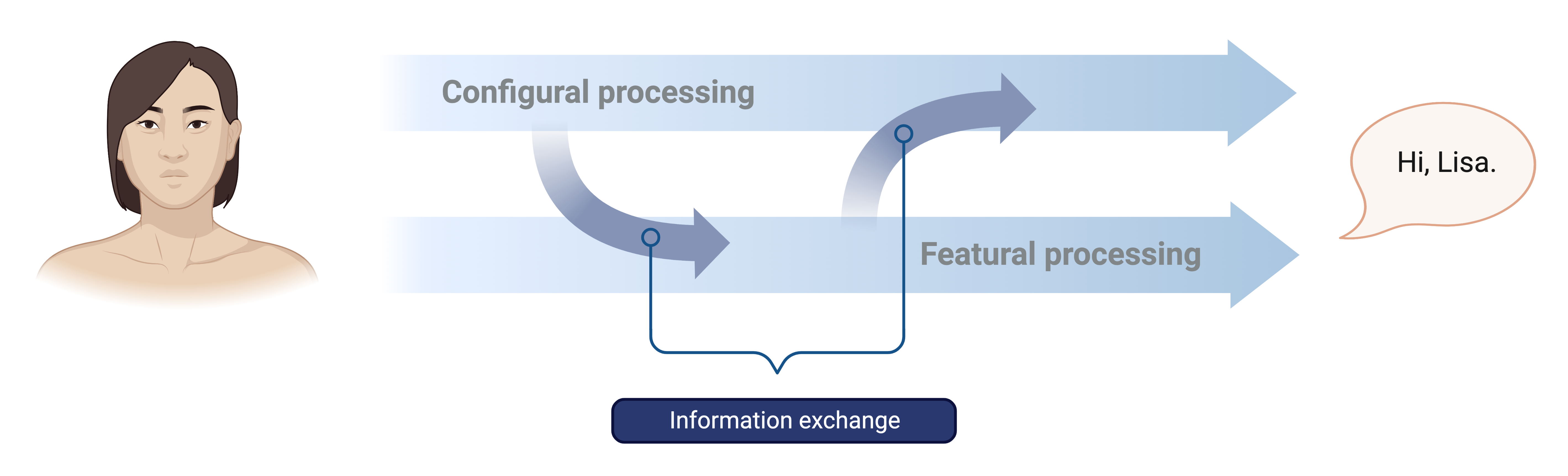

Figure 3. Information exchange during dual processing of face perception. The dual processing model suggests parallel processing, but de Gelder emphasizes dynamic information exchange between configural and featural representations.

The above interpretation of the dual processing model implies that configural processing and featural processing run in parallel. However, de Gelder emphasized that this is a dynamic model, in which featural and configural representations are exchanged as output between the face detection system and the face identification system18. Most interestingly, de Gelder proposed that face detection requires limited stimulus exposure, whereas face identification depends on extensive exposure and shares processing resources with object recognition. This is supported by the evidence that newborns who had not seen a face tracked face-like patterns more than scrambled configurations26, suggesting that detection of face-like patterns is an innate response. On the other hand, the accuracy of identifying familiar upright faces improves linearly from 5 to 18 years old27. In addition, this accuracy improves more rapidly than the accuracy of identifying inverted faces, suggesting that the ability to identify individual faces develops continuously with age, likely due to more exposure to upright faces and improved memory27.

A Refined Model of Dynamic Face Perception

Built on the dual processing model and de Gelder’s proposal on face expertise18, this paper proposes a refined model in which face identification is determined by expertise with dynamic faces, as demonstrated by the behavioural evidence below. Though de Gelder designed a comprehensive model, the model is based on face perception experiments that used primarily static faces. As we mostly interact with dynamic faces in real life, generalizing the model to dynamic faces will advance our understanding of how faces are perceived in real life.

A piece of direct evidence for the difference between static face perception and dynamic face perception is that a prosopagnosic patient, who was impaired in identifying familiar static faces, was able to identify dynamic faces19, suggesting that characteristics unique to dynamic faces also support face identification, aside from static facial features and featural configuration. Moreover, unfamiliar faces learned in motion were correctly identified more often than faces learned as static images20, suggesting that dynamic faces are more identifiable than static faces.

An interesting interpretation of the mechanism underlying the advantage of facial motion is that dynamic faces are not encoded better because of intrinsic characteristics of motion, but rather that social animals pay more attention to moving faces, making them more memorable28. However, this paper summarizes two commonly reported characteristics of facial motions that enable this advantage. O’Toole and her colleagues proposed that changeable motion-based information in dynamic faces provides rich communication signals supplementary to static faces, which facilitate face perception under non-optimal viewing conditions29. This paper further develops the proposal by arguing that the distinctive characteristics of facial motions account for the advantages of dynamic faces in face perception.

Firstly, facial motions provide facial information from various viewpoints. Identification of static faces depends on the learned view of the face stimuli30. Familiarity with the learned view does not support identification of an unlearned view of the same face, whereas head rotations support generalization to both upright and inverted orientations30, suggesting that three-dimensional facial information reduces viewpoint dependence. Secondly, motion-based facial expressions facilitate face identification. For example, Lander and her colleagues found that distinctive motion patterns, such as smiling and talking, serve as an additional cue to the identity of the face31. Familiarity with dynamic faces correlates with the distinctiveness of facial motions32, suggesting that faces moving in a distinctive manner benefit the most from being seen in motion. Another piece of evidence is that the parents of monozygotic twins, or identical twins, were able to distinguish between their own twins, with whom they interacted in real life33. Given that facial features and configurations are identical for the twins, this finding suggests that familiarity with the twins’ facial motions contributes to the successful identification of their faces.

Based on the refined model, in which expertise with dynamic faces accounts for the advantages of dynamic faces, we should expect dynamic face perception to be more successful when the observer is more familiar with the facial motion. This prediction is supported by the finding that infants gradually develop a looking preference for dynamic faces over dynamic abstract stimuli with age34. This preference appears later than the preference for static faces, indicating that the perception of dynamic faces is a learned behaviour.

Discussion

In conclusion, built on the dual processing model that integrates featural processing and configural processing, this paper proposes a refined model of dynamic face perception by drawing evidence from the functional roles of facial motions and the gradual acquisition of a preference for dynamic faces in infants. As we are more likely to encounter moving faces in real life, it would be interesting for future studies to investigate whether the refined expertise model could predict dynamic face perception in both face detection and face identification tasks. An interesting direction would be investigating whether expertise with the dynamic faces of similar-looking individuals (e.g., familiarity with identical twins’ continuous facial movements) contributes to the identification of their faces, as, when looking at photographs after learning, parents of identical twins are slower at distinguishing between unfamiliar twins than between their own twins, with whom they interact in real life33. With the recent advancement in photo-realistic morphing techniques and synthetic facial animations, dynamic face stimuli are more controllable, as the new techniques allow for sophisticated manipulation of facial characteristics and motions. Therefore, adopting dynamic face stimuli in face perception experiments will be a novel and promising direction35.

Moreover, generalizing the dual processing model to dynamic faces will provide a stepping stone to constructing computational models that capture the cognitive process underlying dynamic face perception. Based on David Marr’s proposal on connecting the computational, algorithmic, and implementational levels of human visual processing36, if we understand how the featural and configural information of dynamic faces is processed, we can construct computational models of the cognitive process of face perception in real-world situations. This can be done, for example, by connecting behavioural and neural data of dynamic face perception and capturing non-observable variables using methods such as Bayesian inference37.
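As a minimal illustration of how Bayesian inference can recover a non-observable variable from behavioural data, the Python sketch below estimates a latent identification accuracy from counts of correct responses using a grid approximation. The flat prior, the binomial likelihood, the function names, and the response counts are all assumptions chosen for illustration; the counts are hypothetical and are not taken from any cited study.

```python
def grid_posterior(hits, trials, grid_size=101):
    """Posterior over a latent accuracy p, assuming a flat prior and a binomial likelihood."""
    grid = [i / (grid_size - 1) for i in range(grid_size)]
    unnorm = [p ** hits * (1 - p) ** (trials - hits) for p in grid]
    total = sum(unnorm)
    return grid, [u / total for u in unnorm]

def posterior_mean(grid, post):
    """Expected value of the latent accuracy under the grid posterior."""
    return sum(p * w for p, w in zip(grid, post))

def prob_greater(post_a, post_b):
    """P(p_a > p_b) for two independent posteriors defined on the same grid."""
    total, cum_b = 0.0, 0.0
    for w_a, w_b in zip(post_a, post_b):
        total += w_a * cum_b  # cum_b is the mass of p_b strictly below this grid point
        cum_b += w_b
    return total

# Hypothetical counts: correct identifications out of 20 trials per condition.
grid, post_dynamic = grid_posterior(hits=18, trials=20)
_, post_static = grid_posterior(hits=12, trials=20)

mean_dynamic = posterior_mean(grid, post_dynamic)
mean_static = posterior_mean(grid, post_static)
p_dynamic_better = prob_greater(post_dynamic, post_static)
```

Under these assumptions, `p_dynamic_better` approximates the posterior probability that the latent accuracy is higher for dynamic than for static faces. A full model of dynamic face perception would replace the single accuracy parameter with parameters tied to featural and configural processing.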

Separately, modelling the cognitive processing strategies that humans use to perceive and distinguish similar-looking individuals will allow for the optimization of face recognition algorithms which label images of faces. Similar to the human perception of identical twins, human observers who interact with dynamic faces still outperform computer classifiers that are trained on static faces in identifying identical twins’ faces38,39. Thus, uncovering the processing strategies may allow for the optimization of face recognition algorithms, as the architectures of modern artificial neural networks frequently draw inspiration from animal brain models40. Notable examples include Spiking Neural Networks (SNN) inspired by synaptic transmission41, Convolutional Neural Networks (CNN) inspired by the visual processing system42, and Recurrent Neural Networks (RNN) inspired by the backpropagation of errors in the brain43,44.

Acknowledgements

The author would like to express gratitude to the esteemed reviewers, whose thoughtful and constructive feedback greatly enhanced the quality of this manuscript. Appreciation is also extended to the dedicated editorial teams for their invaluable assistance throughout the submission and review process.

Funding Statements

The author has no funding statements to declare.

Declaration of Competing Interests

The author has no competing interests to declare.

Author Information

Authors and Affiliations

Department of Psychology, University of Toronto, Toronto, ON, Canada

Yinqi Huang

Corresponding Author

Please direct correspondence to Yinqi Huang.

References

1. Leopold, D. A. & Rhodes, G. A comparative view of face perception. Journal of Comparative Psychology 124, 233–251 (2010). https://doi.org/10.1037/a0019460

2. Bruce, V. & Young, A. Face Perception. (Psychology Press, 2013). https://doi.org/10.4324/9780203721254

3. Sergent, J. An investigation into component and configural processes underlying face perception. British Journal of Psychology 75, 221–242 (1984). https://doi.org/10.1111/j.2044-8295.1984.tb01895.x

4. Tanaka, J. W. & Farah, M. J. Parts and Wholes in Face Recognition. The Quarterly Journal of Experimental Psychology Section A 46, 225–245 (1993). https://doi.org/10.1080/14640749308401045

5. Tanaka, J. W. & Sengco, J. A. Features and their configuration in face recognition. Memory & Cognition 25, 583–592 (1997). https://doi.org/10.3758/BF03211301

6. Farah, M. J., Wilson, K. D., Drain, M. & Tanaka, J. N. What is “special” about face perception? Psychological Review 105, 482–498 (1998). https://doi.org/10.1037/0033-295X.105.3.482

7. Carey, S. & Diamond, R. From Piecemeal to Configurational Representation of Faces. Science 195, 312–314 (1977). https://www.science.org/doi/10.1126/science.831281

8. Tanaka, J. W., Kay, J. B., Grinnell, E., Stansfield, B. & Szechter, L. Face Recognition in Young Children: When the Whole is Greater than the Sum of Its Parts. Visual Cognition 5, 479–496 (1998). https://doi.org/10.1080/713756795

9. Mondloch, C. J., Le Grand, R. & Maurer, D. Configural Face Processing Develops more Slowly than Featural Face Processing. Perception 31, 553–566 (2002). https://doi.org/10.1068/p3339

10. Mercure, E., Dick, F. & Johnson, M. H. Featural and configural face processing differentially modulate ERP components. Brain Research 1239, 162–170 (2008). https://doi.org/10.1016/j.brainres.2008.07.098

11. Bentin, S., Allison, T., Puce, A., Perez, E. & McCarthy, G. Electrophysiological Studies of Face Perception in Humans. Journal of Cognitive Neuroscience 8, 551–565 (1996). https://doi.org/10.1162/jocn.1996.8.6.551

12. Rotshtein, P., Geng, J. J., Driver, J. & Dolan, R. J. Role of Features and Second-order Spatial Relations in Face Discrimination, Face Recognition, and Individual Face Skills: Behavioral and Functional Magnetic Resonance Imaging Data. Journal of Cognitive Neuroscience 19, 1435–1452 (2007). https://doi.org/10.1162/jocn.2007.19.9.1435

13. Lobmaier, J. S., Klaver, P., Loenneker, T., Martin, E. & Mast, F. W. Featural and configural face processing strategies: evidence from a functional magnetic resonance imaging study. NeuroReport 19, 287–291 (2008). https://doi.org/10.1097/WNR.0b013e3282f556fe

14. Renzi, C. et al. Processing of featural and configural aspects of faces is lateralized in dorsolateral prefrontal cortex: A TMS study. NeuroImage 74, 45–51 (2013). https://doi.org/10.1016/j.neuroimage.2013.02.015

15. Bartlett, J. C. & Searcy, J. Inversion and Configuration of Faces. Cognitive Psychology 25, 281–316 (1993). https://doi.org/10.1006/cogp.1993.1007

16. Rakover, S. S. & Teucher, B. Facial inversion effects: Parts and whole relationship. Perception & Psychophysics 59, 752–761 (1997). https://doi.org/10.3758/BF03206021

17. Macho, S. & Leder, H. Your eyes only? A test of interactive influence in the processing of facial features. Journal of Experimental Psychology: Human Perception and Performance 24, 1486–1500 (1998). https://doi.org/10.1037/0096-1523.24.5.1486

18. de Gelder, B. & Rouw, R. Beyond localisation: a dynamical dual route account of face recognition. Acta Psychologica 107, 183–207 (2001). https://doi.org/10.1016/S0001-6918(01)00024-5

19. Lander, K., Humphreys, G. & Bruce, V. Exploring the Role of Motion in Prosopagnosia: Recognizing, Learning and Matching Faces. Neurocase 10, 462–470 (2004). https://doi.org/10.1080/13554790490900761

20. Butcher, N., Lander, K., Fang, H. & Costen, N. The effect of motion at encoding and retrieval for same- and other-race face recognition: The effect of facial motion on same- and other-race recognition. British Journal of Psychology 102, 931–942 (2011). https://doi.org/10.1111/j.2044-8295.2011.02060.x

21. Curio, C., Bülthoff, H. H. & Giese, M. A. Dynamic Faces: Insights from Experiments and Computation. (MIT Press, 2010). https://doi.org/10.7551/mitpress/9780262014533.001.0001

22. Maurer, D., Le Grand, R. & Mondloch, C. J. The many faces of configural processing. Trends in Cognitive Sciences 6, 255–260 (2002). https://doi.org/10.1016/S1364-6613(02)01903-4

23. Lobmaier, J. S., Bölte, J., Mast, F. W. & Dobel, C. Configural and featural processing in humans with congenital prosopagnosia. Advances in Cognitive Psychology 6, 23–34 (2010). https://doi.org/10.2478/v10053-008-0074-4

24. Avidan, G., Tanzer, M. & Behrmann, M. Impaired holistic processing in congenital prosopagnosia. Neuropsychologia 49, 2541–2552 (2011). https://doi.org/10.1016/j.neuropsychologia.2011.05.002

25. Bentin, S. & Deouell, L. Y. Structural encoding and identification in face processing: ERP evidence for separate mechanisms. Cognitive Neuropsychology 17, 35–55 (2000). https://doi.org/10.1080/026432900380472

26. Goren, C. C., Sarty, M. & Wu, P. Y. Visual following and pattern discrimination of face-like stimuli by newborn infants. Pediatrics 56, 544–549 (1975). https://pubmed.ncbi.nlm.nih.gov/1165958/

27. Hills, P. J. & Lewis, M. B. The development of face expertise: Evidence for a qualitative change in processing. Cognitive Development 48, 1–18 (2018). https://doi.org/10.1016/j.cogdev.2018.05.003

28. Lander, K. & Bruce, V. The role of motion in learning new faces. Visual Cognition 10, 897–912 (2003). https://doi.org/10.1080/13506280344000149

29. O’Toole, A. J., Roark, D. A. & Abdi, H. Recognizing moving faces: a psychological and neural synthesis. Trends in Cognitive Sciences 6, 261–266 (2002). https://doi.org/10.1016/S1364-6613(02)01908-3

30. Hill, H. Information and viewpoint dependence in face recognition. Cognition 62, 201–222 (1997). https://doi.org/10.1016/S0010-0277(96)00785-8

31. Lander, K. & Chuang, L. Why are moving faces easier to recognize? Visual Cognition 12, 429–442 (2005). https://doi.org/10.1080/13506280444000382

32. Butcher, N. & Lander, K. Exploring the Motion Advantage: Evaluating the Contribution of Familiarity and Differences in Facial Motion. Quarterly Journal of Experimental Psychology 70, 919–929 (2017). https://doi.org/10.1080/17470218.2016.1138974

33. Sæther, L. & Laeng, B. On Facial Expertise: Processing Strategies of Twins’ Parents. Perception 37, 1227–1240 (2008). https://doi.org/10.1068/p5833

34. Hunnius, S. & Geuze, R. H. Developmental Changes in Visual Scanning of Dynamic Faces and Abstract Stimuli in Infants: A Longitudinal Study. Infancy 6, 231–255 (2004). https://www.tandfonline.com/doi/abs/10.1207/s15327078in0602_5

35. Dobs, K., Bülthoff, I. & Schultz, J. Use and Usefulness of Dynamic Face Stimuli for Face Perception Studies—a Review of Behavioral Findings and Methodology. Frontiers in Psychology 9, (2018). https://doi.org/10.3389/fpsyg.2018.01355

36. Marr, D. Vision: a computational investigation into the human representation and processing of visual information. (MIT Press, 2010).

37. Wilson, R. C. & Collins, A. G. Ten simple rules for the computational modeling of behavioral data. eLife 8, e49547 (2019). https://doi.org/10.7554/eLife.49547

38. Biswas, S., Bowyer, K. W. & Flynn, P. J. A study of face recognition of identical twins by humans. in 2011 IEEE International Workshop on Information Forensics and Security 1–6 (2011). https://doi.org/10.1109/WIFS.2011.6123126

39. Paone, J. R. et al. Double Trouble: Differentiating Identical Twins by Face Recognition. IEEE Transactions on Information Forensics and Security 9, 285–295 (2014). https://ieeexplore.ieee.org/document/6693698

40. Zador, A. M. A critique of pure learning and what artificial neural networks can learn from animal brains. Nature Communications 10, 3770 (2019). https://doi.org/10.1038/s41467-019-11786-6

41. Maass, W. Networks of spiking neurons: The third generation of neural network models. Neural Networks 10, 1659–1671 (1997).

42. LeCun, Y. & Bengio, Y. Convolutional networks for images, speech, and time series. in The handbook of brain theory and neural networks 255–258 (MIT Press, 1998).

43. Rumelhart, D. E., Hinton, G. E. & Williams, R. J. Learning representations by back-propagating errors. Nature 323, 533–536 (1986).

44. Hochreiter, S. & Schmidhuber, J. Long Short-Term Memory. Neural Computation 9, 1735–1780 (1997).
